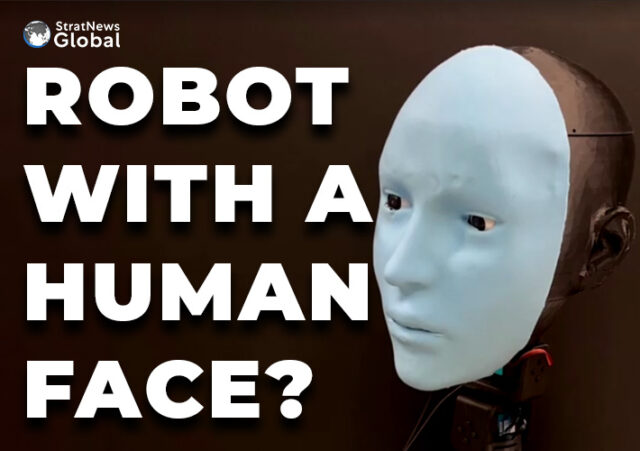

Engineers at Columbia University’s Creative Machines Lab have developed Emo, a robot capable of mimicking human facial expressions.

While robots have made strides in verbal communication through advancements like ChatGPT, their ability to express facial cues has lagged. The researchers believe Emo is a significant advance in non-verbal communication between humans and robots.

Emo, described in a study published in Science Robotics, can anticipate a person’s facial expression and make the same expression at the same time as the person does. It can predict a forthcoming smile about 840 milliseconds (0.84 seconds) before it happens.

The study’s lead author described the two challenges the team faced: building a mechanically expressive face, and determining when to generate expressions so that they appear natural and timely.

“The primary goal of the Emo project is to develop a robotic face that can enhance the interactions between humans and robots,” explained Yuhang Hu, a PhD student and the study’s lead author.

“As robots become more advanced and complicated like those powered by AI models, there’s a growing need to make these interactions more intuitive,” he added.

Emo’s human-like head uses 26 actuators for a range of facial expressions and is covered with silicone skin. It features high-resolution cameras in its eyes for lifelike interactions and eye contact, crucial for non-verbal communication. The team used AI models to predict human facial expressions and generate corresponding robot facial expressions.

“Emo is equipped with several AI models including detecting human faces, controlling facial actuators to mimic facial expressions and even anticipating human facial expressions. This allows Emo to interact in a way that feels timely and genuine,” Hu added.

The robot was trained using a process termed “self-modelling”, in which Emo made random movements in front of a camera and learned the relationship between its motor commands and the facial expressions they produced. Then, after observing videos of human expressions, Emo learned to predict a person’s expression from the subtle changes in their face as they begin to form a smile.
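The self-modelling step described above can be illustrated with a toy sketch. This is not the researchers' code: the article says only that Emo made random movements in front of a camera and learned how motor commands relate to expressions. Everything else here is an assumption for illustration, including the simulated face (`make_toy_face`), the choice of 40 landmark coordinates, the linear model, and the use of least squares. Only the actuator count of 26 comes from the article.

```python
import numpy as np

NUM_ACTUATORS = 26        # actuator count reported in the article
NUM_LANDMARK_COORDS = 40  # hypothetical number of tracked face coordinates


def make_toy_face(rng):
    """Hypothetical stand-in for the physical face plus camera.

    A fixed linear map from motor commands to landmark coordinates;
    the real robot's response is unknown and certainly not this simple.
    """
    mixing = rng.normal(size=(NUM_ACTUATORS, NUM_LANDMARK_COORDS))

    def observe(commands):
        return commands @ mixing

    return observe


def babble(observe, num_samples, rng):
    """Issue random motor commands and record the resulting landmarks."""
    commands = rng.uniform(-1.0, 1.0, size=(num_samples, NUM_ACTUATORS))
    return commands, observe(commands)


def fit_forward_model(commands, landmarks):
    """Least-squares fit of a matrix F such that commands @ F ~ landmarks."""
    forward, *_ = np.linalg.lstsq(commands, landmarks, rcond=None)
    return forward


def commands_for(forward, target_landmarks):
    """Solve for the motor commands whose predicted landmarks match the target."""
    commands, *_ = np.linalg.lstsq(forward.T, target_landmarks, rcond=None)
    return commands


rng = np.random.default_rng(0)
observe = make_toy_face(rng)

# Self-modelling: random movements, then learn commands -> expression.
commands, landmarks = babble(observe, num_samples=500, rng=rng)
forward_model = fit_forward_model(commands, landmarks)

# Use the learned model to reproduce a target expression.
target_commands = rng.uniform(-1.0, 1.0, size=NUM_ACTUATORS)
target_landmarks = observe(target_commands)
recovered_commands = commands_for(forward_model, target_landmarks)
reproduction_error = float(
    np.max(np.abs(observe(recovered_commands) - target_landmarks))
)
print(f"max landmark error: {reproduction_error:.2e}")
```

In this toy setting the learned model reproduces the target almost exactly because the simulated face is noise-free and linear. A real face with silicone skin would need a nonlinear model and noisy camera data, which is presumably why the team used AI models for this step.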

The team says the study marks a shift in human-robot interaction (HRI): robots that can factor in human expressions should be able to interact more naturally with people and foster trust.

The team plans to integrate verbal communication into Emo to allow the robot “to engage in more complex and natural conversations.”

With inputs from Reuters